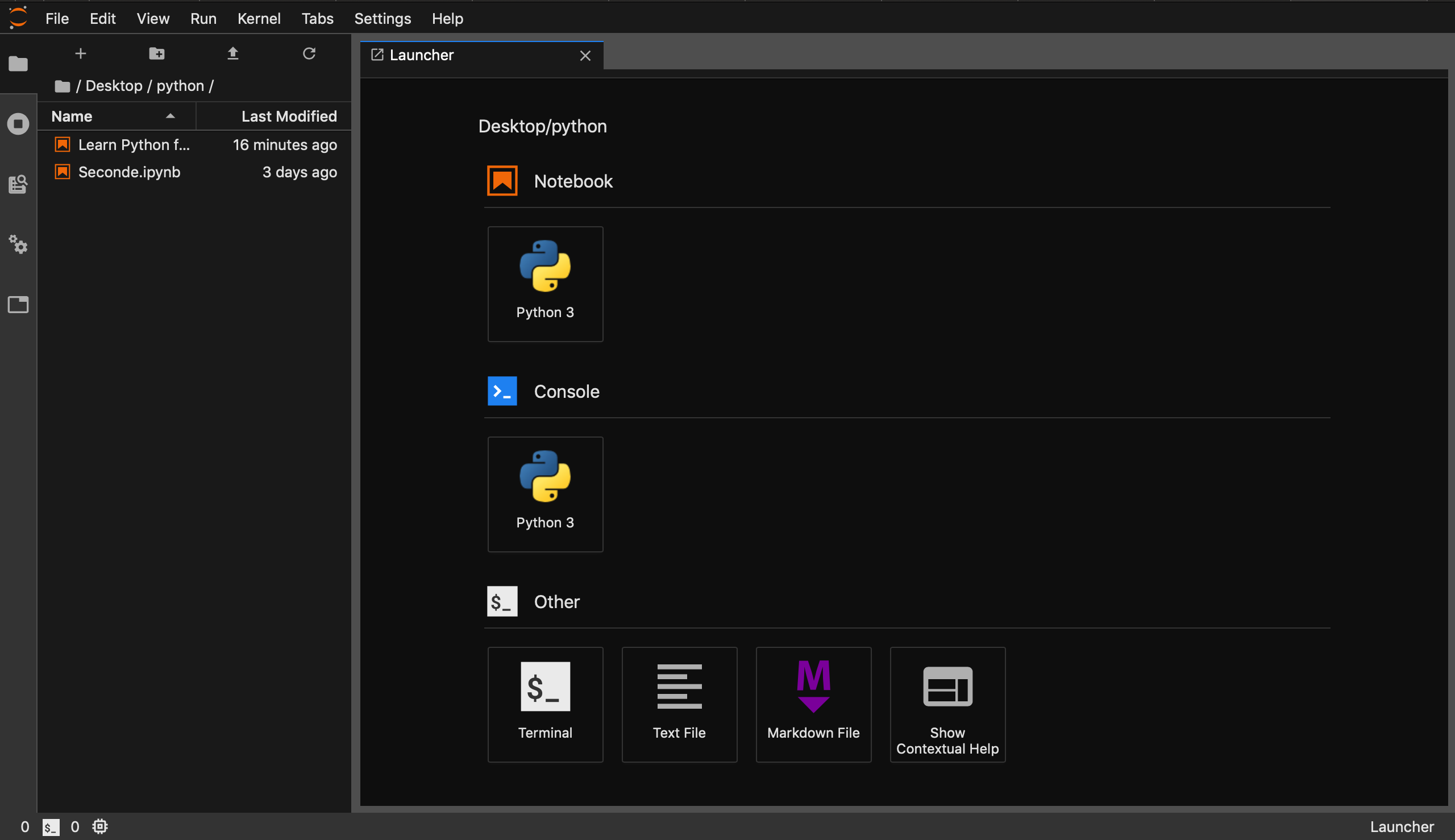

For many years now, data scientists have developed specific workflows on premises using local filesystem hierarchies, source code revision systems and CI/CD processes. On the other hand, the available data is growing exponentially, and new capabilities for data analysis and modeling are needed: for example, easily scalable storage, distributed computing systems, or special hardware such as GPUs for deep learning. These capabilities are hard to provide on premises in a flexible way. As a result, companies increasingly leverage solutions in the cloud, and data scientists face the challenge of combining their existing local workflows with these new cloud-based capabilities.

The project JupyterLab Integration, published in Databricks Labs, was built to bridge these two worlds. Data scientists can use their familiar local environments with JupyterLab and work with remote data and remote clusters simply by selecting a kernel.

Example scenarios enabled by JupyterLab Integration from your local JupyterLab:

- Execute single node data science Jupyter notebooks on remote clusters maintained by Databricks with access to the remote Data Lake.
- Run deep learning code on Databricks GPU clusters.
- Run remote Spark jobs with an integrated user experience (progress bars, DBFS browser).
- Mirror a remote cluster environment locally (Python and library versions) and switch seamlessly between local and remote execution by just selecting Jupyter kernels.

This blog post starts with a quick overview of what using a remote Databricks cluster from your local JupyterLab looks like. It then provides an end-to-end example of working with JupyterLab Integration, followed by an explanation of the differences from Databricks Connect. If you want to try it yourself, the last section explains the installation.

## Using a remote cluster from a local JupyterLab

JupyterLab Integration follows the standard approach of Jupyter/JupyterLab and allows you to create Jupyter kernels for remote Databricks clusters (this is explained in the next section). To work with JupyterLab Integration, you start JupyterLab with the standard command `jupyter lab`.

In the notebook, select the remote kernel from the menu to connect to the remote Databricks cluster, and get a Spark session with the following Python code:

```python
from databrickslabs_jupyterlab.connect import dbcontext

dbcontext()
```
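Switching between local and remote execution is only seamless if the local environment really mirrors the cluster's Python and library versions. As a minimal sketch of checking that before switching kernels, assuming you have already collected the cluster's versions yourself, the helpers below compare them with the local environment using only the standard library. The names `local_versions`, `version_mismatches` and the `cluster` dictionary are hypothetical illustrations, not part of JupyterLab Integration.

```python
import sys
from importlib import metadata


def local_versions(packages):
    """Return {package: installed version, or None if missing} for this environment."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None  # package is not installed locally
    return versions


def version_mismatches(remote_versions, local=None):
    """Return {name: (remote_version, local_version)} for every entry that differs.

    remote_versions is a hypothetical mapping gathered from the cluster, e.g.
    {"python": "3.7.5", "pandas": "0.25.3"}. The key "python" is compared with
    the local interpreter version; all other keys are treated as package names.
    If `local` is given, it is used instead of inspecting this environment.
    """
    if local is None:
        packages = [name for name in remote_versions if name != "python"]
        local = local_versions(packages)
        local["python"] = "{}.{}.{}".format(*sys.version_info[:3])
    mismatches = {}
    for name, remote in remote_versions.items():
        found = local.get(name)
        if found != remote:  # exact string comparison, deliberately strict
            mismatches[name] = (remote, found)
    return mismatches


if __name__ == "__main__":
    # Hypothetical versions; replace them with what your cluster actually reports.
    cluster = {"python": "3.7.5", "pandas": "0.25.3", "pyarrow": "0.13.0"}
    for name, (remote, found) in version_mismatches(cluster).items():
        print(f"{name}: cluster {remote}, local {found}")
```

An empty result means every listed version matches; otherwise each entry shows the cluster version next to the local one (`None` if the package is missing locally). Comparing version strings exactly is a deliberately strict choice; a looser check could compare only major and minor versions.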
